At Giant Swarm our Giantnetes (G8s) platform runs Kubernetes within Kubernetes and is built using microservices and operators. You can read more about Giantnetes in a post we did for The New Stack earlier this year.

We’ve been developing with microservices for a long time, but operators are a much newer concept; the term was originally coined by CoreOS. An operator is a custom controller that automates tasks within Kubernetes, and it usually relies on Third Party Resources (TPRs). TPRs are a way of extending the Kubernetes API to manage custom resources.

So far we’ve released two operators for Kubernetes: our aws-operator launches Kubernetes clusters on AWS, and cert-operator issues certificates using Vault. Soon we’ll be open sourcing our kvm-operator, which launches Kubernetes clusters in VMs on bare metal. This post describes what cert-operator does and why we built it. It also introduces operatorkit, a library we’re developing to make it easier to create operators.

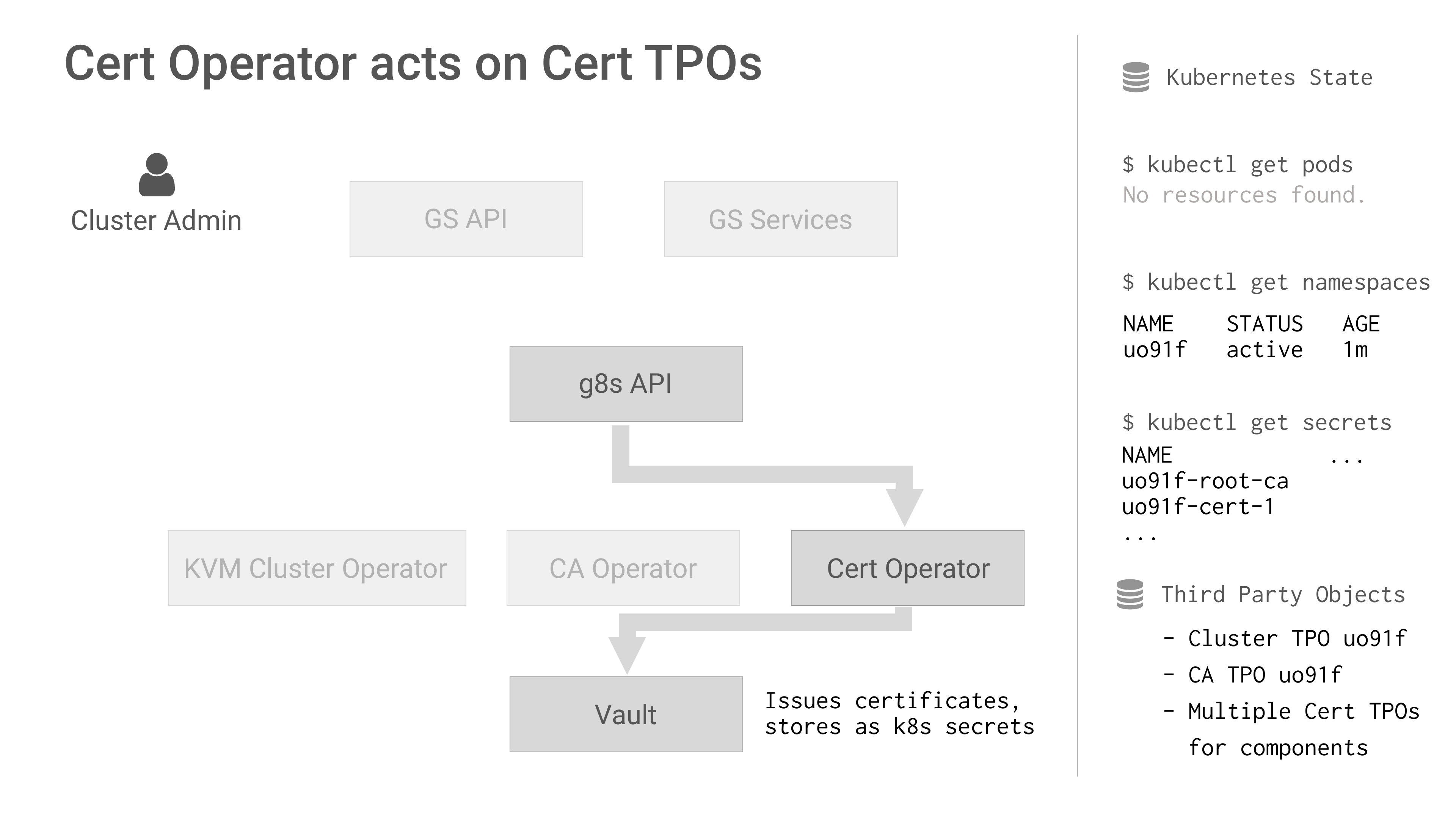

The cert-operator has a simple task. It watches for new certificatetpr Third Party Objects (TPOs), issues the certificate using Vault and stores it as a Kubernetes secret. We use it to create certificates for Kubernetes components (API, etcd, kubelet, etc.) that our cluster operators need. In this case the AWS and KVM operators watch for these secrets and use them when launching clusters.
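The flow described above (react to a new object, issue a certificate, store it as a secret) can be sketched roughly as follows. This is a minimal illustration and not cert-operator's actual code: the `Event` type, the `Issuer` and `SecretStore` interfaces, the in-memory fakes and the secret naming scheme are all assumptions made for the example.

```go
package main

import "fmt"

// Event is a simplified, hypothetical stand-in for a watch event on a
// certificate object. Kubernetes watch events carry a type such as
// "ADDED", "MODIFIED" or "DELETED".
type Event struct {
	Type       string
	ClusterID  string
	CommonName string
}

// Issuer and SecretStore are hypothetical interfaces standing in for
// Vault and the Kubernetes secrets API.
type Issuer interface {
	Issue(clusterID, commonName string) ([]byte, error)
}

type SecretStore interface {
	Put(name string, data []byte) error
}

// fakeIssuer returns a placeholder instead of a real certificate.
type fakeIssuer struct{}

func (fakeIssuer) Issue(clusterID, commonName string) ([]byte, error) {
	return []byte("cert for " + commonName), nil
}

// memoryStore keeps secrets in a map instead of the cluster.
type memoryStore map[string][]byte

func (s memoryStore) Put(name string, data []byte) error {
	s[name] = data
	return nil
}

// handle issues a certificate for newly added objects and stores it.
// Other event types are ignored in this sketch.
func handle(ev Event, issuer Issuer, store SecretStore) error {
	if ev.Type != "ADDED" {
		return nil
	}
	cert, err := issuer.Issue(ev.ClusterID, ev.CommonName)
	if err != nil {
		return fmt.Errorf("issuing certificate: %w", err)
	}
	// The secret name format is an assumption for illustration.
	return store.Put(ev.ClusterID+"-"+ev.CommonName, cert)
}

func main() {
	store := memoryStore{}
	ev := Event{Type: "ADDED", ClusterID: "abc12", CommonName: "api"}
	if err := handle(ev, fakeIssuer{}, store); err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("secrets stored:", len(store))
}
```

Keeping Vault and the secrets API behind small interfaces like these makes the handler easy to test without a running cluster.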

The certificatetpr resource has a Spec that configures the certificate, including the Common Name, any Alternative Names and the TTL. On G8s we create the certificate TPOs using one of our internal microservices.
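To make the Spec more concrete, here is a hedged sketch of how such an object might be modelled and validated in Go. The field names, JSON keys and example values are assumptions for illustration; the real certificatetpr schema may differ.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"time"
)

// CertificateSpec is a hypothetical shape for the certificatetpr Spec.
// It carries the fields named in the text plus the cluster ID.
type CertificateSpec struct {
	ClusterID  string   `json:"clusterID"`
	CommonName string   `json:"commonName"`
	AltNames   []string `json:"altNames"`
	TTL        string   `json:"ttl"`
}

// CertificateObject wraps the spec the way a custom object would.
type CertificateObject struct {
	Spec CertificateSpec `json:"spec"`
}

// parseCertificate decodes an object and performs basic validation.
func parseCertificate(data []byte) (CertificateObject, error) {
	var obj CertificateObject
	if err := json.Unmarshal(data, &obj); err != nil {
		return obj, fmt.Errorf("decoding object: %w", err)
	}
	if obj.Spec.CommonName == "" {
		return obj, errors.New("spec.commonName must not be empty")
	}
	// Assumes the TTL is written as a Go-style duration such as "720h".
	if _, err := time.ParseDuration(obj.Spec.TTL); err != nil {
		return obj, fmt.Errorf("parsing spec.ttl: %w", err)
	}
	return obj, nil
}

func main() {
	raw := []byte(`{"spec":{"clusterID":"abc12","commonName":"api.abc12.example.com","altNames":["kubernetes","kubernetes.default"],"ttl":"720h"}}`)
	obj, err := parseCertificate(raw)
	if err != nil {
		fmt.Println("invalid certificate object:", err)
		return
	}
	fmt.Printf("issue %s (alt names %v) for cluster %s, valid for %s\n",
		obj.Spec.CommonName, obj.Spec.AltNames, obj.Spec.ClusterID, obj.Spec.TTL)
}
```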

Before issuing a certificate the operator checks if a PKI backend exists in Vault for the Cluster ID specified in the TPO spec. If it doesn’t exist then a new PKI backend is created. This ensures that each cluster has its own PKI backend and Certificate Authority (CA) within Vault. For G8s we’re aiming for a micro operator architecture, so we plan to split the CA logic out to a separate CA operator with its own CA TPR. These micro operators can then collaborate by watching for TPRs as well as built in K8s resources like secrets and config maps.
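The "check, then create if missing" step can be sketched as below. The `PKIManager` interface and the `fakeVault` type are hypothetical stand-ins written for this example; they are not the Vault client API or cert-operator's implementation.

```go
package main

import "fmt"

// PKIManager is a hypothetical abstraction over the Vault operations
// the text describes.
type PKIManager interface {
	BackendExists(clusterID string) (bool, error)
	CreateBackend(clusterID string) error
	IssueCertificate(clusterID, commonName string) (string, error)
}

// fakeVault is an in-memory stand-in so the sketch runs without Vault.
type fakeVault struct {
	backends map[string]bool
	created  int
}

func (v *fakeVault) BackendExists(clusterID string) (bool, error) {
	return v.backends[clusterID], nil
}

func (v *fakeVault) CreateBackend(clusterID string) error {
	v.backends[clusterID] = true
	v.created++
	return nil
}

func (v *fakeVault) IssueCertificate(clusterID, commonName string) (string, error) {
	return fmt.Sprintf("cert(%s) signed by ca(%s)", commonName, clusterID), nil
}

// issue makes sure the cluster has its own PKI backend before issuing.
func issue(m PKIManager, clusterID, commonName string) (string, error) {
	exists, err := m.BackendExists(clusterID)
	if err != nil {
		return "", fmt.Errorf("checking PKI backend: %w", err)
	}
	if !exists {
		if err := m.CreateBackend(clusterID); err != nil {
			return "", fmt.Errorf("creating PKI backend: %w", err)
		}
	}
	return m.IssueCertificate(clusterID, commonName)
}

func main() {
	v := &fakeVault{backends: map[string]bool{}}
	for _, cn := range []string{"api", "etcd", "kubelet"} {
		cert, err := issue(v, "abc12", cn)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Println(cert)
	}
	fmt.Println("backends created:", v.created)
}
```

Because the backend is only created when it is missing, issuing several certificates for the same cluster reuses one backend and one CA.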

As we started to develop multiple operators we quickly saw code duplication and small differences in approach between our operators. For microservices we’ve developed microkit, which is an opinionated framework for developing microservices that is built on top of the go-kit framework.

To help with building our operators we’re developing operatorkit, which is a framework for building operators. So far it has a client for managing TPRs, a client for connecting to the k8s API using client-go, and we’re adding support for watching TPRs next.

As mentioned earlier, developing operators for Kubernetes is still a new technique. There is now a wide variety of operators performing different tasks and developed using different approaches. Lack of documentation is a problem, but the situation is slowly improving, and the client-go repo now has an example for third party resources which includes a controller.

We use the k8s.io/client-go package for developing our operators. The package is very large because it’s extracted from the main Kubernetes repo, which results in a large vendor directory and slow compilation times. We’ve experimented with the more lightweight k8s client from Eric Chiang at CoreOS. However, it was missing some support for TPRs, so for now we’re sticking with client-go.

Third Party Resources are still alpha in Kubernetes 1.6 and, as you’d expect, there are still a few rough edges. For example, the watch URLs for TPRs use plurals, but reverse engineering these in code can be painful: the plural for awstpr is awses, but for kvmtpr it’s kvms. We’ve built support for this into operatorkit, but the beta proposal for TPRs will let you specify the plural name for a TPR yourself.
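As an illustration of the plural problem, a naive heuristic that reproduces the two examples above might look like this. It is a sketch only, not operatorkit's implementation, and it assumes the input is the short name (`aws`, `kvm`); how the full TPR name maps to that short name is not covered here.

```go
package main

import (
	"fmt"
	"strings"
)

// pluralize guesses the plural form used in a watch URL.
// Names ending in "s" get "es"; everything else gets "s".
// This is a deliberately naive rule chosen for illustration.
func pluralize(name string) string {
	if name == "" {
		return ""
	}
	lower := strings.ToLower(name)
	if strings.HasSuffix(lower, "s") {
		return lower + "es"
	}
	return lower + "s"
}

func main() {
	for _, name := range []string{"aws", "kvm"} {
		fmt.Printf("%s -> %s\n", name, pluralize(name))
	}
}
```

Any rule like this will eventually guess wrong for some name, which is why being able to declare the plural explicitly is the more robust fix.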

Furthermore, Kubernetes 1.7 is deprecating TPRs and replacing them with Custom Resource Definitions (CRDs), so there’s some migration to be done. Being able to manage this migration in operatorkit and use it in all our operators would be a goal for future development.

We’re continuing to extend operatorkit as we develop more operators. Soon we’ll be adding support for reconciliation loops. A reconciliation loop ensures that when a TPO is created or deleted, all the necessary k8s resources are also created or deleted. This is something we need for our KVM operator.
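The core of a reconciliation loop is a comparison between desired state (the TPOs that exist) and actual state (the resources that exist). The following is a minimal sketch of that comparison under the assumption that both sides can be identified by name; it is not operatorkit's API, and the resource names are made up.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares the names that should exist (derived from TPOs)
// with the names that do exist, and returns what to create and delete.
func reconcile(desired, actual []string) (toCreate, toDelete []string) {
	want := make(map[string]bool, len(desired))
	for _, name := range desired {
		want[name] = true
	}
	have := make(map[string]bool, len(actual))
	for _, name := range actual {
		have[name] = true
	}
	for name := range want {
		if !have[name] {
			toCreate = append(toCreate, name)
		}
	}
	for name := range have {
		if !want[name] {
			toDelete = append(toDelete, name)
		}
	}
	// Sort for deterministic output, since map iteration order is random.
	sort.Strings(toCreate)
	sort.Strings(toDelete)
	return toCreate, toDelete
}

func main() {
	desired := []string{"cluster-a", "cluster-b"}
	actual := []string{"cluster-b", "cluster-c"}
	toCreate, toDelete := reconcile(desired, actual)
	fmt.Println("create:", toCreate)
	fmt.Println("delete:", toDelete)
}
```

Running this comparison periodically, rather than only reacting to individual events, lets an operator recover from events it missed while it was down.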

We’re also developing more micro operators. Currently we’re developing a flannel-operator that removes flannel bridges. This is a cleanup task when KVM clusters are deleted and is an example of the type of cluster admin task that can be automated using operators. We also have plans for a dns-operator for creating the DNS records needed by our guest clusters.
